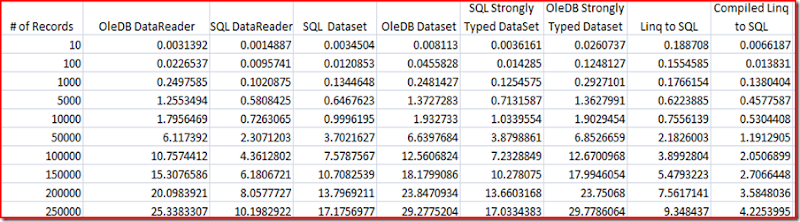

I’m currently working with a client that supports an application originally built around 2003 so it was targeting the 1.1 Framework. Last summer they upgraded to the 3.5 Framework, but they have not yet taken advantage of the new features that can help them improve their performance. Digging through their code, I found a few things that can be changed pretty easily, but before just telling them “do X because it will help you”, I wanted to run some metrics based on their specific scenario. So I picked one of their main tables and loaded it with different sets of data so that I could test the length of time that it takes to load records into memory for the following counts: 10, 100, 1k, 5k, 10k, 50k, 100k, 150k, 200k, 250k. The table has 89 columns, with the majority of them being nullable (so need to account for DBNull when loading the data). Currently they are using mostly Datasets and Strongly-typed datasets to load data, and they are using the OleDbProvider since they used to target more than just SQl Server. The huge use of datasets can be attributed to Microsoft’s big push for them back then…that was before generics so you either had to choose between ArrayList, your own collection type, or strongly-typed datasets to move data around your app. So here are the results:

DataSet – OleDbDataAdapter: Use the data adapter to load records directly into a DataSet:

string _Sql = "SELECT * FROM TestTable"; DataSet _Dataset = new DataSet(); using (OleDbConnection _Connection = Connection.CreateOleDbConnection()) using (OleDbDataAdapter _Adapter = new OleDbDataAdapter(_Sql, _Connection)) { _Adapter.FillSchema(_Dataset, SchemaType.Source); _Adapter.Fill(_Dataset); _Adapter.SelectCommand.ResetCommandTimeout(); _Adapter.SelectCommand.Cancel(); }

# Records – Execution Time (seconds)

10 – 0.008113

100 – 0.0455828

1,000 – 0.2481427

5,000 – 1.3727283

10,000 – 1.932733

50,000 – 6.6397684

100,000 – 12.5606824

150,000 – 18.1799086

200,000 – 23.8470934

250,000 – 29.2775204

DataSet – SqlDataAdapter: Same code as above but with a SqlConnection and a SqlDataAdapter. Just switching the adapter made a really big difference, one that you may not be able to feel if you are only dealing with one record as it’s milliseconds difference, as the recordset grows, so does the difference. And remember, I’m not running any fancy queries or doing anything special that the SQL Adapter would necessarily streamline, but it’s obvious that it knows how to talk to SQL a lot better even on a simple scenario.

# Records – Execution Time (seconds)

10 – 0.0034504

100 – 0.0120853

1,000 – 0.1344648

5,000 – 0.6467623

10,000 – 0.9996195

50,000 – 3.7021627

100,000 – 7.5787567

150,000 – 10.7082539

200,000 – 13.7969211

250,000 – 17.1756977

Strongly typed-datasets: The results were about the same as using a “regular” dataset, with the added gain that now you can do compile-time checking of your code that references the data:

# Records – OleDb DataSet – SqlProvider

10 – 0.0036161 – 0.0260737

100 – 0.014285 – 0.1248127

1000 – 0.1254575 – 0.2927101

5000 – 0.7131587 – 1.3627991

10000 – 1.0339554 – 1.9029454

50000 – 3.8798861 – 6.8526659

100000 – 7.2328849 – 12.6700968

150000 – 10.278075 – 17.9946054

200000 – 13.6603168 – 23.75068

250000 – 17.0334383 – 29.7786064

SqlDataReader: I was definitely expecting this to be a lot faster, and it was. But then I had to take into account that I want to provide the same functionality that a strongly-typed dataset gives you. So I created a data-object that would get hydrated with the data that is returned with the DataReader and then load that into a List

List<MyTestType> _TestList = new List<MyTestType>(); string _Sql = "SELECT * FROM TestTable"; using (SqlConnection _Connection = Connection.CreateSqlConnection()) using (SqlCommand _Command = new SqlCommand(_Sql, _Connection)) using (SqlDataReader _Reader = _Command.ExecuteReader(System.Data.CommandBehavior.CloseConnection)) { while (_Reader.Read()) { _TestList.Add( new MyTestType { Name = GetString(_Reader, TableOrdinals.Name), AccountNumber = GetInt32(_Reader, TableOrdinals.AccountNumber), IsEnabled = GetBoolean(_Reader, TableOrdinals.IsEnabled), ...and so on... }); } }

# Records – OleDbDataReader(secs) – SqlDataReader(secs)

10 – 0.0031392 – 0.0014887

100 – 0.0226537 – 0.0095741

1000 – 0.2497585 – 0.1020875

5000 – 1.2553494 – 0.5808425

10000 – 1.7956469 – 0.7263065

50000 – 6.117392 – 2.3071203

100000 – 10.7574412 – 4.3612802

150000 – 15.3076586 – 6.1806721

200000 – 20.0983921 – 8.0577727

250000 – 25.3383307 – 10.1982922

Linq to SQL: I added LINQ to SQL classes through Visual Studio and I added my table from Server Explorer. Then I used the DataContext to get to my data and returned all the data as a List. Linq to SQL uses delayed execution so I had to do that in order for it to go through all the records and return them. The results were faster than a DataReader, not by a lot, but with the added bonus that I don’t have to create my data type and I don’t have to worry about DbNull checking and other plumbing.

using (DataClasses1DataContext _Context = new DataClasses1DataContext()) { _Context.TestTableRecords.ToList(); }

# Records – Execution Time(secs)

10 – 0.188708

100 – 0.1554585

1000 – 0.1766154

5000 – 0.6223885

10000 – 0.7556139

50000 – 2.1826003

100000 – 3.8992804

150000 – 5.4793223

200000 – 7.5617141

250000 – 9.348437

Compiled Linq to SQL: So I thought I was done, afterall I had done what I had set out to do, but then one of my business partners Marius, pointed me to an article that mentioned Compiled Linq to SQL, which then led me to this MSDN blog post that describes what it is, so I gave it a shot and WOW!!! Basically, it translates your code into SQL the first time and then keeps your generated SQL to be reused. So using the same scenario:

private static Func<DataClasses1DataContext, IQueryable<TestTable>> SelectTestTableCompiledQuery = System.Data.Linq.CompiledQuery.Compile( (DataClasses1DataContext contextLocal) => from q in contextLocal.TestTableRecords select q);

and then from within your data-gathering method:

using (DataClasses1DataContext _Context = new DataClasses1DataContext()) { SelectTop10CompiledQuery(_Context); }

# Records – Execution Time(secs)

10 – 0.0066187

100 – 0.013831

1000 – 0.1380404

5000 – 0.4577587

10000 – 0.5304408

50000 – 1.1912905

100000 – 2.0506899

150000 – 2.7066448

200000 – 3.5848036

250000 – 4.2253995

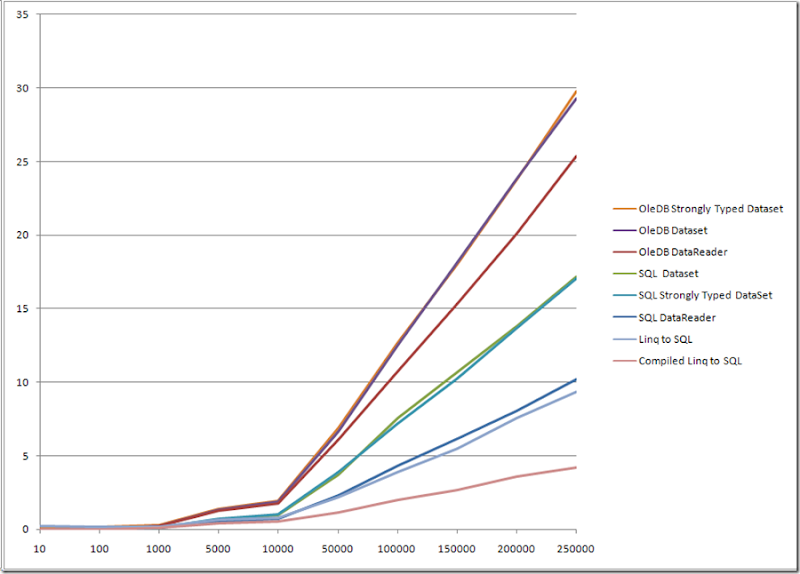

So I can get a huge performance increase by taking advantage of the newer technologies, but just by making small changes such as using the right provider can make a difference. Obviously, we can’t just blindly start making changes left and right, use your best judgement and tackle areas where you will get the most benefit. And always take a look at what’s happening in SQL by using Profiler…Linq can sometimes generate some funny stuff!

Here are all the results and a nice picture: